Ethical AI and Privacy Series: Article 2, The Regulations

As artificial intelligence (AI) technologies continue to advance, lawmakers are working to pass legislation to govern their responsible use. AI now reaches into almost every industry, raising important questions about privacy, data protection, and accountability.

In this second article of our series on AI, we delve deeper into the legal landscape surrounding these technologies. Understanding the evolving legal requirements and establishing a framework for managing them is crucial for organizations to navigate the ethical and regulatory challenges surrounding AI.

Broad Laws

Several laws that are already in effect, or close to taking effect, apply broadly to all types of AI technologies in most or all industries and sectors. Some of these include:

- International Privacy Laws: Many privacy laws already include requirements for processes that are typically carried out using AI systems. Examples include the General Data Protection Regulation (GDPR) in the EU and laws in a growing number of U.S. states, such as the California Privacy Rights Act (CPRA). The AI-related requirements in these laws focus primarily on automated decision making and related profiling techniques. Some mandate assessments, such as data protection impact assessments, to evaluate the potential risks and implications of these automated processes. Others give individuals the right to opt out of having their data used in profiling or automated decision making, especially when the outcome can have legal or similarly significant effects on them. Many such laws are already in effect, and many more have been passed or are in various stages of the legislative process.

- U.S. Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence: The White House issued an executive order aimed at positioning the United States as a leader in AI by addressing various aspects of AI development and deployment. An executive order is not legislation, but it is binding on federal agencies, which carry out its directives within the limits of existing law. The White House described this order as the most sweeping set of actions taken to date to protect Americans from the potential risks of AI systems. It directs actions in the following areas:

  - New Standards for AI Safety and Security: This includes implementing repeatable, standardized evaluations of AI systems, creating mechanisms for identifying and mitigating the risks associated with them, establishing standards for detecting AI-generated content, and fixing vulnerabilities in critical software.

  - Protecting Americans’ Privacy: This aims to ensure that personal information used to train AI models is collected, used, and retained responsibly, by promoting the development and strengthening of privacy-preserving techniques and technologies.

  - Advancing Equity and Civil Rights: This focuses on ensuring that AI systems don’t produce biased outcomes that can exacerbate discrimination and other abuses in areas such as the criminal justice system and the housing market.

  - Standing Up for Consumers, Patients, and Students: This includes advancing the responsible use of AI in the healthcare and education systems.

  - Supporting Workers: This requires establishing best practices for assessing disruptions to labor markets, minimizing harms, and maximizing the potential benefits of AI for U.S. workers.

  - Promoting Innovation and Competition: This will be accomplished by investing in education, training, research and development, and talent capacity while promoting a fair and equitable AI ecosystem.

  - Advancing American Leadership Abroad: This supports collaboration with other countries to accelerate the development of robust international frameworks for governing AI and to promote its safe and responsible use in solving global problems.

  - Ensuring Responsible and Effective Government Use of AI: This encourages agencies to advance government use of AI by helping them acquire specific AI solutions and by accelerating the hiring of AI professionals.

This executive order was issued in October 2023. Some provisions have already gone into effect, with most others slated to do so throughout 2024.

- EU AI Act: The European Union (EU) has taken a significant step in regulating artificial intelligence with the introduction of the AI Act. This comprehensive legislation sets out rules and requirements for AI systems, aiming to ensure transparency, accountability, and the protection of fundamental rights. It classifies AI systems by their level of risk, using criteria that apply across all industries. Depending on the level of risk, a given system might be prohibited, permitted subject to specific requirements, or required to notify its users. This law will add to the AI-related requirements already introduced by the GDPR. European Parliament committees endorsed the draft in May 2023, and the full Parliament adopted its negotiating position in June 2023. A provisional agreement on the draft rules was reached in December 2023. Although the text is not yet finalized, the law is expected to go into effect sometime in 2026.

- Greece Legal Framework on Emerging Technologies: Greece has implemented Law 4961/2022, known more commonly as the Legal Framework on Emerging Technologies, which aims to protect the rights of individuals and entities, enhance accountability and transparency in the use of AI systems, and complement the country’s existing cybersecurity framework. It obliges public bodies that use AI systems to be transparent about that use and to provide information on system parameters and on decisions made with AI support. An algorithmic impact assessment is required before an AI system is operated; it must consider the system’s purpose, capabilities, and data processing, as well as the potential risks to individuals’ rights. The law also establishes a Coordinating Committee for AI, an AI Observatory, and a Committee of Data Protection Officers. This law was passed in July 2022 and went into effect in March 2023.

Narrow Laws

Other laws that are already in effect, or close to taking effect, apply only to certain types of AI technologies or to specific industries and use cases. Some of these include:

- New York City AEDT Law: New York City has implemented Local Law 144 of 2021, known more commonly as the Automated Employment Decision Tools (AEDT) Law, which restricts how employers and employment agencies may use AI systems to screen and assess candidates for hiring and employees for promotion. To comply, employers must have a bias audit conducted on each such tool and provide appropriate notices to candidates before using it to process their information. The law was enacted in December 2021, the final rules were adopted in April 2023, and enforcement began in July 2023.

- China Generative AI Measures: China has introduced the Interim Measures for the Management of Generative Artificial Intelligence Services, known more commonly as the Generative AI Measures, to promote the development and security of this fast-growing part of the AI technology landscape. The measures outline specific requirements for Chinese companies using generative AI technology to create text, pictures, sounds, videos, and other content within China’s jurisdiction. Of particular significance is the requirement that companies source data for AI models in a manner that upholds the intellectual property rights of individuals and companies. These measures were issued in July 2023 and went into effect in August 2023.

Emerging Laws

In addition to the laws that have already been passed or gone into effect, many more AI laws are under consideration. Some of these include:

- Brazil Bill 2338/2023: This bill aims to establish a legal framework for regulating AI systems in Brazil. It focuses on establishing rules for making AI systems available, protecting the rights of individuals affected by their operation, imposing penalties for violations, and creating a supervisory body. Notably, the bill sets requirements for operating AI systems, including a preliminary assessment that suppliers must conduct to determine whether a system poses high or excessive risk. A draft was delivered to the Senate in December 2022, the bill was formally presented by the president of the Senate in May 2023, and it is still being evaluated.

- Canada AI and Data Act: Bill C-27 (the Digital Charter Implementation Act) covers three proposed pieces of legislation, including the Artificial Intelligence and Data Act. The act aims to ensure that high-impact AI systems are developed and deployed in a way that mitigates risks and bias. It also establishes an AI and Data Commissioner and outlines criminal prohibitions and penalties. Bill C-27 was introduced in June 2022 and is still being considered, with speculation that it will pass sometime before Canada’s next federal election in 2025.

Conclusion

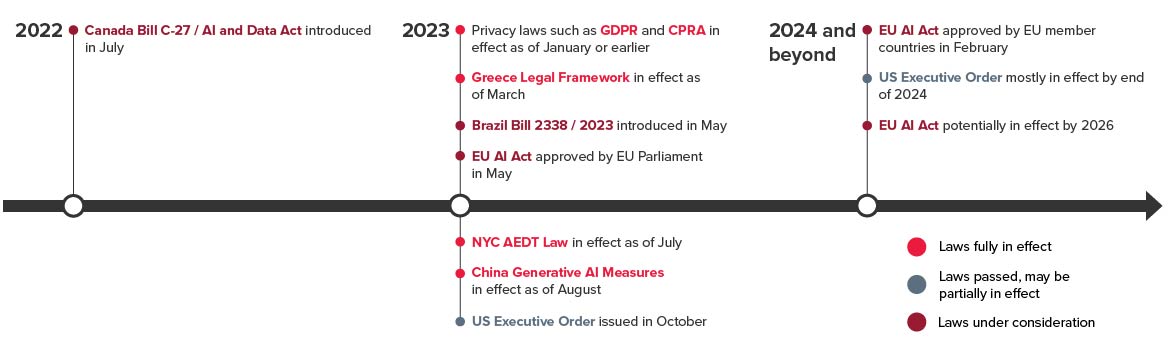

The rapid advancement of AI technologies has brought forth a range of legal implications that must be carefully considered. The legal landscape surrounding these technologies is complex, multifaceted, and continuously evolving. While not meant to be exhaustive, this article highlights many AI-related laws, and the diagram below shows a consolidated timeline of their evolution:

Organizations must be proactive in understanding and adhering to these laws to help ensure the responsible and ethical use of AI. Doing so can help them harness AI’s transformative potential while safeguarding individual rights and meeting regulatory requirements. The upcoming third article in this series will discuss approaches for implementing an ethical AI governance framework for your organization.


